Guest post by Alisa Momin

A recent study published in Health Affairs[1] shows a dramatic inconsistency in how different rating systems rate the same hospitals. The study looked at four well-known national rating systems:

(1) the US News & World Report “Best Hospitals” list,

(2) HealthGrades (“America’s 100 Best Hospitals”),

(3) the Leapfrog Group (“Hospital Safety Score”), and

(4) Consumer Reports (“Health Safety Score”).

The study found that no hospital was rated highly on all four lists, and only 10 percent of hospitals rated highly on one list were also rated highly on any of the other lists. In several cases, a hospital sat near the top of one list and at the bottom of another!

I find this very interesting and also highly concerning. At a time when patients are becoming more engaged in their healthcare and are actively using the internet and reports like the ones above to choose the hospital that best meets their needs, these conflicting ratings can be unnecessarily confusing and at times alarming. From the hospitals’ point of view, the inconsistency can also hinder efforts to improve the quality of their care: they won’t know what they’re doing wrong.

So why is this happening? According to the study, we don’t have a clear and consistent definition of quality healthcare. The national rating systems do not agree on which methods to use or which performance factors to measure in order to determine quality. So should we standardize hospital rating practices?

Representatives from the ratings companies say no. They argue that because every hospital has strengths and weaknesses in its care, patients will want to use the ratings that most directly match their own needs. Ben Harder (managing editor and director of healthcare analysis for U.S. News & World Report) argued, “If just one rating system existed, [patients] would have less information to use in choosing a provider.” Beyond the ratings companies’ resistance, hospitals’ fear of ending up at the bottom of the list is also obstructing the path to uniform rating measurements.

At the very least, I believe the ranking lists need to explain exactly why a hospital earned the rank it was assigned: its good qualities, its bad qualities, which of its specialties are highly ranked and which are not, and why. This would build patients’ trust in the ranking systems. The information provided to patients needs to be more detailed and comprehensive. In addition, ranking systems should consider adding sections where former and current patients can describe their experiences at a given hospital, much like a “ranking blog”. Facilitating interaction between patients can further the cause of the e-patient while also helping to rate the more personal aspects of care at each hospital. If the goal is to lead patients to the best quality care they can get, let’s not stop halfway.

2014–15 US News “Best Hospitals”[2]

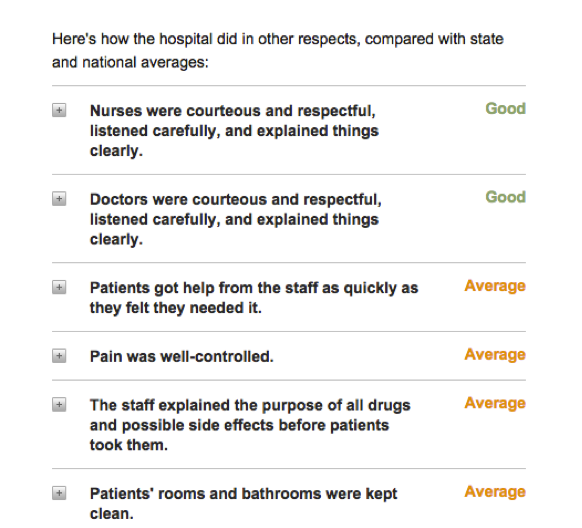

An example from US News’ “Best Hospitals” listing. Is this information detailed enough? Can patients easily use it to compare facilities and pick the best one for themselves?[3]

Sources:

[3] http://health.usnews.com/best-hospitals/area/mn/mayo-clinic-661MAYO/patient-satisfaction